Supervised Semantic Discovery Laboratory

Supervised Semantic Discovery Laboratory

Supervised Semantic Discovery Laboratory offers functionality related to data processing stage following acquisition and digitization in the Source Laboratory and enrichment of metadata by annotation in the Automated Enrichment Lab. This stage is data binding and discovery of new relationships and thus building a knowledge base for information mining.

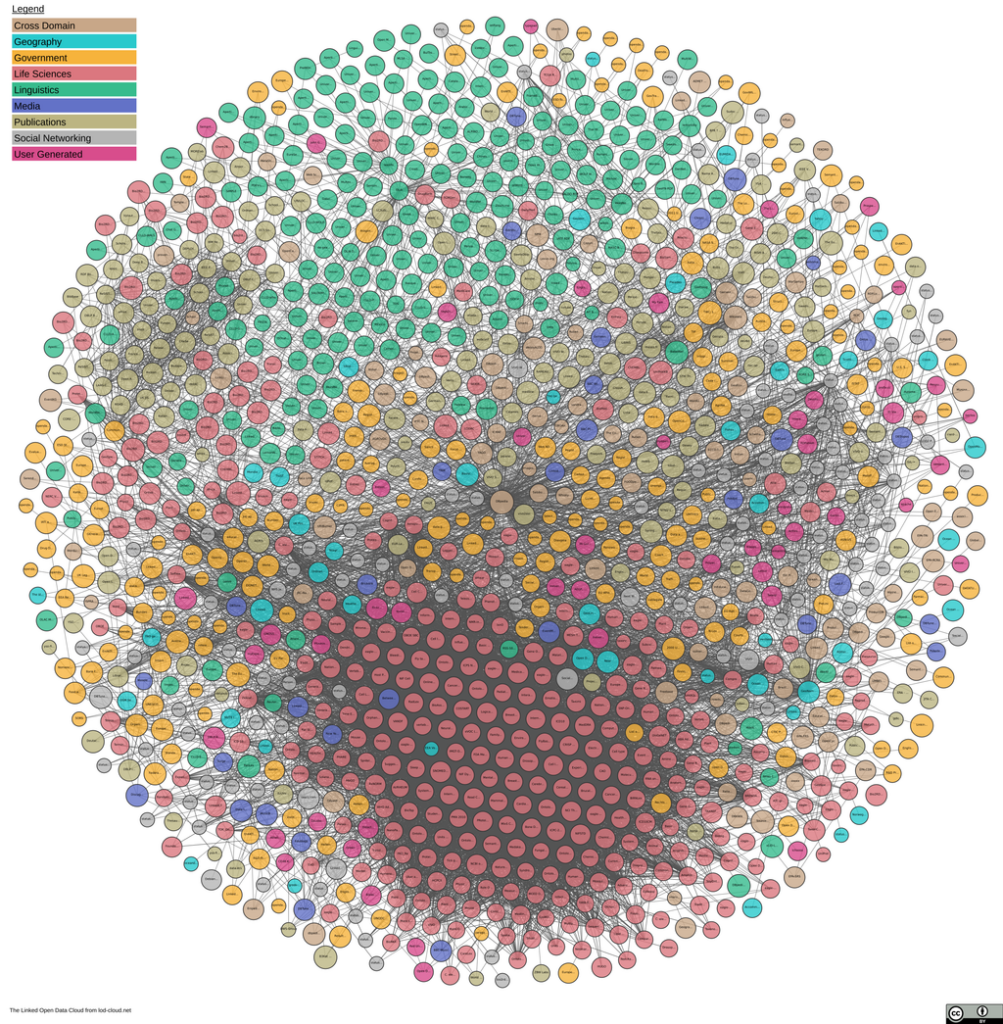

https://lod-cloud.net/, CC BY 4.0 via Wikimedia Commons

Linked Open Data

Different datasets may contain information about the same entity, such as a person or a place. Data binding, in simple terms, involves detecting connection and combining information contained in different sets, thus creating a knowledge base about this entity for further analysis. For this purpose, a machine-interpretable representation of content is not sufficient. What is needed is metadata that describes the content in a semantic sense using available vocabularies and reference data.

It is necessary to ensure not only that content can be “read” by a computer, but also that it can be understood, through appropriate semantic labeling of the content. In addition, the data should be structured in a certain way to enable automatic interpretation and processing. In other words, the data should be prepared and made available according to Linked Open Data (LOD) rules.

What is LOD? Linked Open Data is a way of publishing structured data that allows metadata to be combined and enriched so that different representations or instances of the same information can be found and related resources can be linked. LOD is a term which also refers to linked datasets published according to this principle.

How to Make LOD

Named entities and relevant terms detected in the process of enriching textual materials metadata must be tagged in a way that allows them to be properly interpreted, using universal reference datasets such as VIAF (Virtual International Authority File) for people or GeoNames for geographic names. In this way the same entity, such as a person, appearing in different datasets is assigned the same unique identifier (URI).

The next step in creating a dataset that is a part of LOD is to describe the relationships between tagged elements in the form of triples. A triple contains an entity, a predicate describing the relationship, and an object, following the rules of the Resource Description Framework (RDF) standard defined as a model for representing and exchanging data. Similarly to entities, relationships should also be specified using existing and generally used dictionaries and ontologies.

Use of vocabularies developed for different knowledge domains ensures consistency of data from different collections and enables their binding. RDF is an abstract model that can be formally written using various formats, such as Turtle or RDF/XML. With metadata generated and stored according to the above principles, queries can be formulated in SPARQL languages to retrieve specific information.

The Laboratory will offer a range of LOD-related tools and resources including: unifying data; creating, enriching and sharing dictionary resources; and projecting data onto LOD.

Normalization, Standardization and Unification

Data binding is often hampered by problems related to non-standard data formats, lack of uniform descriptions in metadata or the spatio-temporal dimension of content. These problems can be eliminated by performing normalization, standardization and semantic alignment.

Unification can apply to both data format and its metadata. Non-standard format (e.g., DjVu), can limit the use of that data. Therefore, it is important to make data available in formats that ensure interoperability, such as IIIF/JPG (International Image Interoperability Framework) for images or PDF. Transformation can also apply to the form of the data. For example, data in tabular form, saved in CSV format, should be converted to RDF form, which can be saved in Turtle or RDF/XML format.

Metadata from different sources is diverse not only in terms of description (metadata) schema used, but also in terms of information in the description, i.e., the individual metadata fields. The Laboratory will offer tools to project metadata from one schema to another. The projection of data onto LOD will in turn enable enrichment of metadata with external identifiers by linking named entities and relevant terms from different domains with the LOD network, i.e., using dictionaries and ontologies available in the Dariah.lab infrastructure and other resources.

Semantic-lexical Resources

One of the basic principles of LOD is to describe data by relying on the existing dictionaries, thesauri or ontologies. When it is not possible to express a particular piece of information using the existing terms or relationships, it is necessary to extend the existing resources or to define new ones for a given area of knowledge. The Laboratory will provide semantic and lexical resources, as well as tools for creating, editing and presenting controlled vocabularies, thesauri and ontologies.

Data published on the Internet according to the LOD principles form a large network of interconnected resources – ontologies, thesauri and knowledge bases. LOD include Wikipedia, Princeton WordNet, keywords (e.g., Library of Congress), as well as a variety of specialized thesauri, such as the medical MeSH and astronomical UAT. One of the resources to be made available in Dariah.lab will be the VeSNet developed at the Laboratory – the Polish version of the LOD, including Polish equivalents for hundreds of thousands of terms from a wide variety of lexical resources, and integrated with Słowosieć, which – together with Princeton WordNet – will be the center of VeSNet. The Laboratory will also provide integrated lexical resources for Polish and Medieval Latin. i.e. dictionaries, material directories and language corpora. Another specialized resource will be a collection of aggregated and linked data in literary studies.

The Laboratory will provide tools for creating, expanding and editing existing and new dictionaries in the semantic SKOS (Simple Knowledge Organization System) model. SKOS is one of the Semantic Web standards built on RDF, and its main purpose is to facilitate publication and use of vocabularies as linked data. The vocabularies used to prepare metadata of digital resources can take various forms. So it is important to be able to bind concepts from existing dictionaries and thesauri and consolidate them. In the latter case, it will be possible to project a user-created resource onto similar English-language resources, thus popularizing it.